This Is As Good As It Gets: Visions of a Post-LLM/Diffusion World

We may well have been born too late to explore the earth and too early to explore space, but we have found ourselves in the middle of the AI golden age. But what about the next generation of AI?

Model collapse probably isn’t something you’ve heard of, not yet anyway. It’s the slow degradation of models as they are trained on AI-generated examples. The strangeness that can sometimes accompany AI-generated work, like hands with too many fingers, is turned up to 11 once more training examples have extra fingers than have five. It’s something I think we’re going to be hearing about a lot more, as AI-generated content makes its way onto social media, eventually to be scraped for the next generation of AI.

This is my argument, at its core: AI won’t see a massive improvement like this again. In fact, it will probably get worse. Unless we can find foolproof ways to detect AI-generated content, generated examples will slip into the training data, slowly degrading models. ChatGPT’s GPT-3.5 may well be remembered as the best LLM, not of 2023, but ever.

That time Google Translate told us about the End Times

In 2018 Google Translate made obscure internet news when typing “dog” 19 times produced a prophecy about the end times; you can find many more of these on the r/TranslateGate subreddit. Many users panicked about what exactly Google Translate was trained on, or looked to supernatural explanations. When you dig into how Google Translate works, it does begin to make sense why this happened, and it’s a phenomenon that AI enthusiasts are well aware of now: hallucination.

So how did this happen? Well, Google Translate is trained on what are called parallel texts, a Rosetta Stone of sorts. That means it has multiple translations of the same piece of text, and can go from A→B, B→C and, with some clever maths, also A→C. The most widely available text by number of translations? The Bible. So it perhaps comes as no surprise that these hallucinations are themed around this particular brand of prophecy and the end times. But why does “dog dog dog” make it hallucinate? The language is important: the fewer examples you have of a language, the more you’re relying on smaller and smaller datasets, or on clever maths to link two languages that have no direct links. Couple this with the fact that all models are biased towards producing *something*, and you have a small piece of the training data popping out. As you might expect, this only works with gibberish, because there are no examples of how to translate 19 occurrences of the word dog one after another in Maori; no one has written that sentence yet.

We can see a similar effect in “early” image generation. Google Deep Dream was originally designed to recognise faces in images, but when run in reverse it produces artwork filled with eyes, lips, and dogs. It’s repeating patterns from its training data; faces have eyes, after all.

AI generation at its core is far more reliant on training data than you would assume. Models overfitting to their training data is a huge problem. Essentially, what you do when you create a model is train it just enough that it can solve whatever problem you throw at it, but still generalise to examples outside its training data. We need it to produce new sentences, but not simply words in a random new order; for the model to be correct, its output still needs to read like human language. Large models like the ones that power LLMs need a lot of training examples, and rely on human examples from places like social media, the copyright status of which is still being argued in the courts.
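If overfitting feels abstract, here is a minimal sketch in Python. Everything in it is made up for illustration: the "true" relationship `y = 2x`, the noise level, and the two toy models are my assumptions, not how any real LLM or diffusion model is trained. One model fits a straight line; the other simply memorises the training data and answers with the label of the nearest training point.

```python
import random

def make_data(n, rng, noise=1.0):
    """Noisy samples of a hypothetical ground truth, y = 2x."""
    xs = [rng.uniform(0, 10) for _ in range(n)]
    return [(x, 2 * x + rng.gauss(0, noise)) for x in xs]

def fit_line(data):
    """Ordinary least squares for y = a*x + b (a model that generalises)."""
    n = len(data)
    mean_x = sum(x for x, _ in data) / n
    mean_y = sum(y for _, y in data) / n
    sxx = sum((x - mean_x) ** 2 for x, _ in data)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in data)
    a = sxy / sxx
    b = mean_y - a * mean_x
    return lambda x: a * x + b

def fit_memoriser(data):
    """An overfit model: repeat the label of the nearest training point."""
    return lambda x: min(data, key=lambda p: abs(p[0] - x))[1]

def mse(model, data):
    """Mean squared error of a model on a dataset."""
    return sum((model(x) - y) ** 2 for x, y in data) / len(data)

rng = random.Random(0)
train, test = make_data(200, rng), make_data(1000, rng)
line, memoriser = fit_line(train), fit_memoriser(train)

for name, model in [("line", line), ("memoriser", memoriser)]:
    print(f"{name:>9}: train MSE {mse(model, train):.2f}, "
          f"test MSE {mse(model, test):.2f}")
```

The memoriser is perfect on the data it has already seen, because it is just reciting it, noise included. On unseen data it does worse than the humble straight line. That gap between training and test error is what overfitting looks like.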

There is a growing demand in online artist communities to intentionally poison artwork before AI models can train on it. Tools such as Nightshade and Glaze make imperceptible changes to an image’s pixels, so a human cannot tell the difference but an AI model will. The idea behind these tools is to give human artists, who often have their work scraped from their portfolios on websites like ArtStation, an ‘opt-out’ of sorts. These tools can be hit or miss and many artists worry about the […] There are bypasses of course, and it’s leading to an arms race as humans try to defend their copyright.

Hopefully this has convinced you just how reliant generative AI is on training data, but if not, hopefully this will: it is what Stability AI, the company behind Stable Diffusion, says about their Dance Diffusion model (quoted below). I’m not going to get into AI art in this post, but I highly recommend this video by artist Steven Zapata if you’re interested in hearing more about the so-called AI War waged between AI and human artists.

Dance Diffusion is also built on datasets composed entirely of copyright-free and voluntarily provided music and audio samples. Because diffusion models are prone to memorization and overfitting, releasing a model trained on copyrighted data could potentially result in legal issues.

The AI Golden Age?

Ever since LLMs like ChatGPT and diffusion models such as Stable Diffusion became publicly available, AI-generated content online has exploded. This content might use AI for inspiration or structure but be written by a human, be human-written but edited by AI, or be written entirely by an AI. We have Amazon listings completely generated by ChatGPT, where the product name is an apology for not being able to fulfil the request due to OpenAI’s policies.

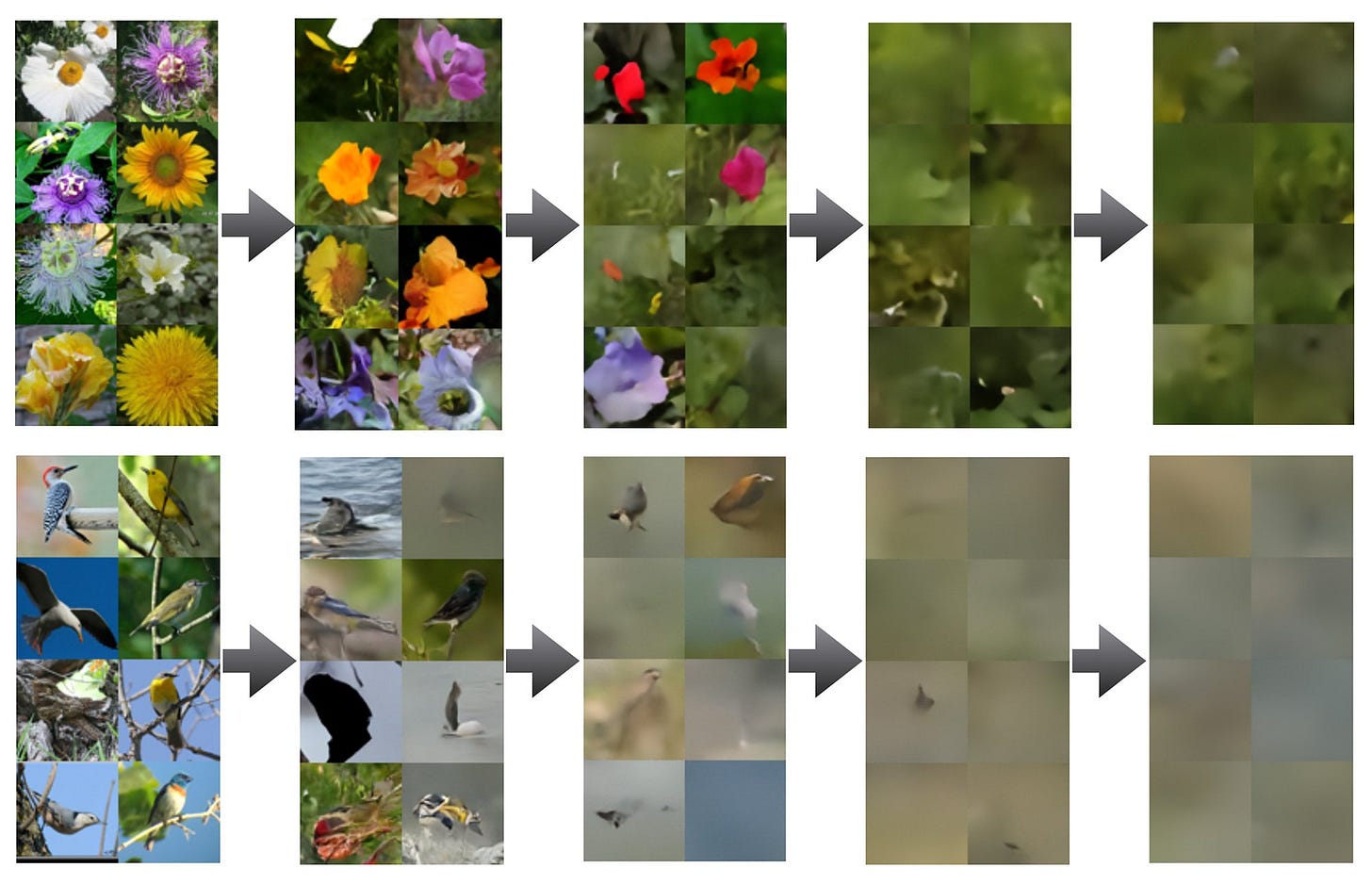

The term model collapse was coined in this paper, and it describes a recursive effect on models as they are trained on AI-generated data. There’s a lot of maths in there to demonstrate this effect. Even fine-tuned models are vulnerable, and it happens quickly (within 10 generations), as small errors begin to become part of the expected output. Model collapse is fundamentally the AI learning that AI artefacts are required because they are in its training data. In each generation the errors and artefacts become more and more common, until all that remains is the error.
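To get a feel for the recursion, here is a toy sketch in Python. To be clear, this is my own simplification and not the paper’s experiment: the "model" is just a bell curve described by a mean and a spread, and the function name `simulate_collapse` and its parameters are made up for illustration. Each generation is trained only on samples produced by the generation before it.

```python
import random
import statistics

def simulate_collapse(generations=10, sample_size=20, seed=0):
    """Repeatedly refit a Gaussian to samples drawn from the previous fit.

    Returns a list of (mean, stdev) pairs, one per generation,
    starting with the original "human" distribution.
    """
    rng = random.Random(seed)
    mean, stdev = 0.0, 1.0  # generation 0: the real, human-made data
    history = [(mean, stdev)]
    for _ in range(generations):
        # The next model only ever sees the previous model's output.
        samples = [rng.gauss(mean, stdev) for _ in range(sample_size)]
        mean = statistics.fmean(samples)
        stdev = statistics.pstdev(samples)  # population (maximum likelihood) estimate
        history.append((mean, stdev))
    return history

for gen, (mean, stdev) in enumerate(simulate_collapse()):
    print(f"generation {gen:2d}: mean {mean:+.3f}, spread {stdev:.3f}")
```

Any single run is noisy, but the estimate used here undershoots the true spread on average, so over enough generations the spread tends to drift towards zero and the mean wanders away from where it started. The rare examples at the edges vanish first, and each generation inherits the sampling errors of the one before it.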

And this gets to the crux of this post: prior to ChatGPT and DALL·E Mini, AI-generated content was rare. We can see early language models in projects like Subreddit Simulator, which trains bots on the content of a single subreddit. Now that we have been exposed to ChatGPT, these posts seem crude and nonsensical. AI-generated text is now good, so good that we can’t easily tell whether something is AI-generated or human-produced, and neither can AI.

Detecting AI-generated content is a multi-million pound business in the education sector, as teachers and professors scramble to adjust to a post-GPT world. Thankfully, plagiarism detectors like Turnitin have launched their own AI writing detection. The only problem? They don’t work. Text edited with advanced spellcheck tools like Grammarly can easily be flagged as AI-generated, because those tools use similar techniques. On the other side you have tools like Undetectable, which provides both a detection tool and a humanise tool - a tool that will change AI-generated text to pass current detection tools. Fundamentally: if we can’t detect whether a piece of text is AI-generated, and we train models on internet-scale text, we are going to train models on AI-generated content, and a lot of it.

So detecting AI text when building the training set is out. What other options do we have? There are a few candidates. Maybe we could train on books instead of social media posts? Nope, AI books are already flooding the marketplace. Maybe we could train on professional writers such as journalists? Nope, 80% of organisations are expecting an increased use of AI. In the realm of image generation, maybe we can rely on human judges? I’m afraid AI has already passed off its work as human. So what are we left with? Well, what if we train our AI only on content from prior to 2022, when ChatGPT became widely available? We already have the datasets. This might be as good as AI will get: models trained as close to the dawn of large models as possible.
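In practice, that last option is just a date filter over the scraped data. Here is a minimal sketch in Python, assuming each scraped document carries a publication date we can trust (a big assumption on the open web). The `Document` class, the `filter_pre_cutoff` function, and the cutoff of 1 January 2022 are all hypothetical, chosen only to match "prior to 2022" above.

```python
from dataclasses import dataclass
from datetime import date
from typing import List, Optional

# Assumption: "prior to 2022" is read as strictly before 1 January 2022.
CUTOFF = date(2022, 1, 1)

@dataclass
class Document:
    text: str
    published: Optional[date]  # None when the scrape has no reliable date

def filter_pre_cutoff(docs: List[Document], cutoff: date = CUTOFF) -> List[Document]:
    """Keep only documents known to be published before the cutoff.

    Undated documents are dropped: if we cannot prove something
    predates the cutoff, we cannot rule out that it is AI-generated.
    """
    return [d for d in docs if d.published is not None and d.published < cutoff]

docs = [
    Document("a forum post from the before times", date(2019, 5, 1)),
    Document("a suspiciously polished product listing", date(2023, 3, 1)),
    Document("a page with no date at all", None),
]
for doc in filter_pre_cutoff(docs):
    print(doc.published, doc.text)
```

Dropping undated documents is the cautious choice, and it shows the cost of this approach: the pool of usable training data is frozen, and it can only shrink from here.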